The 1993 sci-fi adventure film Jurassic Park sees scientists resurrect extinct dinosaurs by genetically engineering preserved DNA fragments, with predictably disastrous results. One of the most memorable lines in the film is chaos theorist Dr Ian Malcolm’s striking criticism of the park: “Your scientists were so preoccupied with whether they could, that they didn’t stop to think if they should.” As well as being a bygone meme, this line serves as a convenient summary of the field of scientific ethics. And as the scope of what scientists can do widens, it becomes ever more important to consider the potential social, political and economic consequences of new advances.

While it may seem obvious now that innovation in science and technology does not exist in a vacuum, but rather informs and is informed by the social order, this was not always acknowledged in academic circles. Until relatively recently, the ‘value-free thesis’ prevailed. This thesis holds that science is completely objective and deals only with facts, so that scientific decisions rest purely on evidence rather than on economic, political or moral values: scientists carry out the research, while politicians and the public address the consequences (Resnik & Elliott, 2016). Now, however, it is widely accepted that scientists have a responsibility to acknowledge the implications of new knowledge.

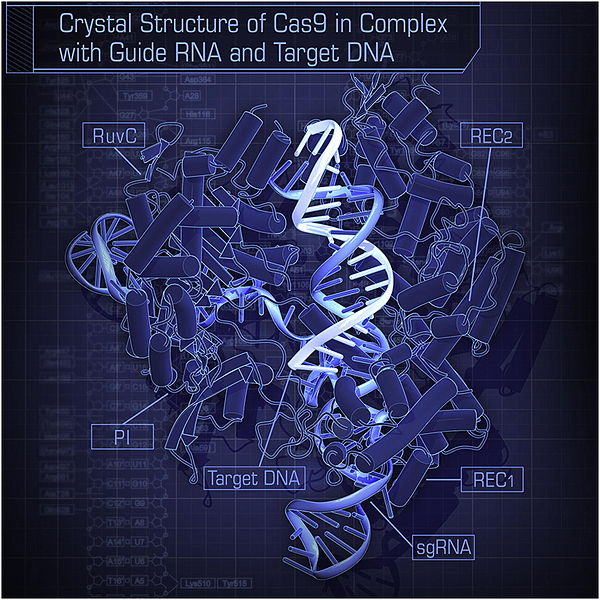

One of the biggest topics in bioethics at the moment is the rise of new genome editing technologies, such as CRISPR. CRISPR editing uses the Cas9 enzyme, found in bacteria as part of a defence mechanism against viral infection, to cut DNA at specific target sites. In this way, genes can be switched off, or disease-causing variants removed and new, healthy alleles inserted in their place. Originally hailed as revolutionary, CRISPR has since had its safety called into question. In 2017, a study claimed that CRISPR editing caused an unexpectedly high number of off-target mutations in mice, although the paper was later retracted. Just last June, however, gene correction via CRISPR was linked to a possible increase in cancer risk. The general consensus among researchers and bioethicists is that the benefits of germline genome editing in humans (edits that are permanent and will be passed on to children) do not yet outweigh the risks. Hence, germline editing should not be used for clinical purposes until it is proven safe.

Crystal structure of the Cas9 enzyme, used in CRISPR genome editing.

© Nishimasu et al / https://dx.doi.org/10.1016/j.cell.2014.02.001 / CC-BY-SA-3.0

The use of human genome editing beyond clinical treatment also carries many potential social side effects. Private access to these technologies would disproportionately benefit wealthy individuals, and differences in uptake according to cultural values could widen existing inequalities. We also need to think about which conditions would be targeted and potentially eradicated. Disability activists often argue that ‘curing’ many non-life-threatening conditions would erase the identity and culture of affected individuals.

Furthermore, the line between treatment and enhancement is easily blurred. If it became possible, would it be wrong to alter genetic predisposition towards preferred ‘normal’ characteristics, such as height, weight, eye colour or skin colour? Would this simply increase existing pressure to conform to patriarchal and Eurocentric beauty standards? At what point do we consider genome editing to be eugenics? These are just some of the reasons that many countries have banned human germline editing.

Another technological innovation with potentially dangerous applications is artificial intelligence. A 2017 study developed an algorithm that could correctly determine the sexuality of subjects up to 91% of the time by examining facial features, expressions and grooming styles. While the findings may offer insight into the biological origins of sexuality, the researchers highlighted the potential for detecting people’s sexuality without their knowledge or consent. The hypothetical applications of this algorithm in the hands of anti-LGBT governments are a sobering thought. In fact, the authors considered not publishing their findings to avoid “enabling the risks [they were] warning against”, but ultimately decided that the public should be aware of these risks in order to “take preventative measures.” Whether this was the right decision is understandably contentious.

A comparable situation arose from research into the H5N1 bird flu virus back in 2011. Researchers identified mutations that could make the virus more infectious to humans, sparking fears that a lab-engineered version of H5N1 could be used as a biological weapon. The results were ultimately published, despite the National Science Advisory Board for Biosecurity’s initial recommendation that key details should not be made public, but the controversy led to a year-long voluntary moratorium on H5N1 gain-of-function studies.

Climate change is arguably the most ubiquitous contemporary issue in science. The overwhelming majority of climate scientists agree that current global warming is caused, inadvertently, by human activities such as the burning of fossil fuels and large-scale agriculture. But what if humans were to intentionally modify the Earth’s climate to counteract rising temperatures?

Geoengineering, or climate intervention, is receiving growing attention as a way of limiting the effects of climate change. It generally takes two forms: removing greenhouse gases from the atmosphere and reducing the amount of solar radiation absorbed by the Earth. But many would argue that there is an ethical distinction between changing the climate on purpose and doing so accidentally – do we have the right to alter the balance of natural global systems, or a responsibility to right the wrongs of human-induced global warming? Furthermore, there are fears that large-scale geoengineering could reduce political pressure to cut carbon emissions, and experts broadly agree that climate intervention should complement, not replace, efforts to mitigate climate change. Events such as the Trump administration cancelling NASA research into greenhouse gas emissions and pulling the U.S. out of the Paris climate agreement show that this political pressure is already under threat.

Scientific and technological advancement can obviously improve the lives of many, but with the potential for good comes the potential for unintended harm. Ethics training and legislation are fundamental to ensuring that the risks of controversial research are considered and minimised, as are guidelines for how new science is reported. As a society, we need to have open conversations about these difficult issues; only then can we decide on the best way forward.